Why photographers should explore Machine Learning

I never thought I would write a blog post about machine learning. After all, I’m a professional photographer with zero background in coding or computer science; I didn’t even go to college! However, about two years ago, I decided to learn the basics of coding to build an app prototype. How hard could it be? (Pretty hard, it turns out.)

After a couple of months of YouTube tutorials, I managed to build a scrappy camera app that could take photos (although I hadn’t figured out how to save them). One day I came across a tutorial on how to build the “Not Hotdog” app, made famous by the TV show Silicon Valley. The basic idea is to create a dataset of hot dog images, then use a machine learning model trained on it to “see” whether what the camera captures is a hot dog or not. The app’s usefulness was, of course, pretty limited, but I realized the potential machine learning could have for creating more interactive photography projects, especially if the hot dogs were replaced with something else.

At the beginning of the 20th century, Pablo Picasso and Georges Braque revolutionized painting by introducing Cubism. Marcel Duchamp, often called the father of conceptual art, believed that despite this sweeping revolution, painting was still a purely “retinal” affair: something whose appeal was directed solely at the eye. In other words, something visually appealing but lacking deeper meaning. In response to “retinal” art, Duchamp went on to create his readymades, which shocked the world and expanded the notion of what art could be.

If any platform has championed the “retinal” in today’s world, it is Instagram. The platform was built around the idea that your iPhone photos could look professional if you slapped filters on them (an idea much despised by professional photographers). On Instagram, photography is purely a shiny object (preferably in millennial pink) with very little room for connecting ideas and concepts to the photos. Sure, you could add animations or write captions, but why would anyone read those when a million photos are screaming for attention just a scroll away?

What really excites me about machine learning, and more specifically computer vision, is its capacity to transform photography from a “retinal” art form into a truly interactive one. With the help of machine learning, photographers can communicate concepts and ideas that go beyond gimmicky filters and effects. Think of it as mixing photography, video, performance, installation, and conceptual art into a single medium.

For me, this revelation started with the hot dog app but quickly grew into something more ambitious when I swapped the hot dog dataset for my own images. I didn’t use my images so the app could identify them; instead, the decision grew out of years of mounting frustration. As we gain experience and knowledge, creative tasks become easier and easier. Once we reach a certain level, we tend to slip into creative auto-pilot mode. I became curious about what my work would look like if I outsourced the creative decision-making process to a machine learning algorithm.

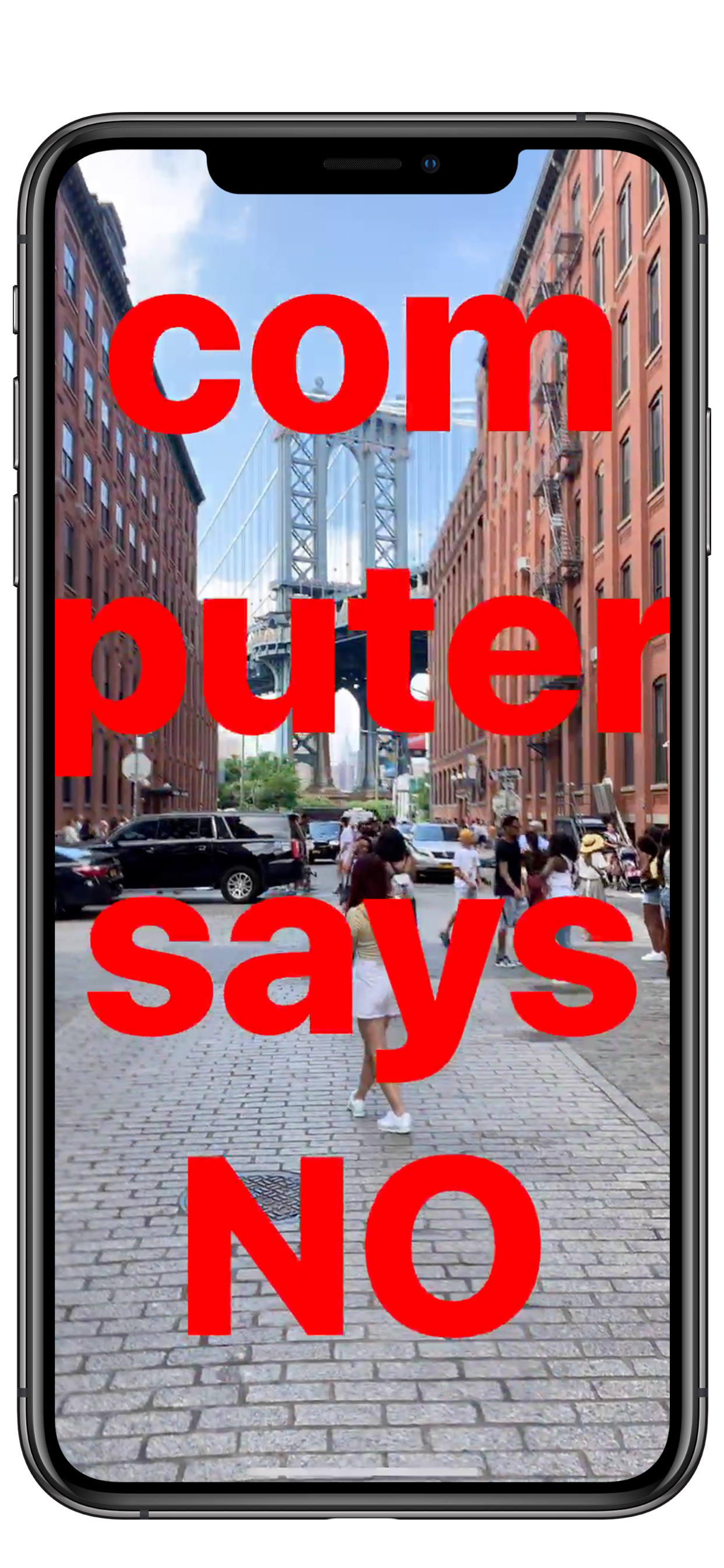

To accomplish this, I built a camera app that on the surface looks very similar to the iPhone’s native camera app. In the background, however, a machine learning model performs “feature extraction”, turning what the camera sees into a numerical description of its visual content. Using these features, the app analyzes the frames coming from the viewfinder and decides whether the photo about to be captured is too similar (unoriginal) to those the user has already taken. If it is, the app removes the capture button, making it impossible to take the photo, and displays a rather annoying message. The user is then forced to choose a different angle or approach in order to capture the image. The point is not to take “better” photos but to pause the creative auto-pilot and challenge the creative decision-making process.
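For the curious, the “too similar” decision can be sketched in a few lines. This is a minimal sketch, not Svetlana’s actual code: it assumes each photo has already been turned into a fixed-length feature vector by some pretrained image model, and it uses cosine similarity with a hypothetical threshold of 0.9 to decide what counts as unoriginal. The names `cosine_similarity`, `is_too_similar`, `capture_allowed`, and `SIMILARITY_THRESHOLD` are illustrative assumptions.

```python
import math
from typing import Sequence

# Hypothetical cutoff (an assumption, not Svetlana's real value):
# a similarity at or above this counts as "too similar".
SIMILARITY_THRESHOLD = 0.9


def cosine_similarity(a: Sequence[float], b: Sequence[float]) -> float:
    """Cosine of the angle between two feature vectors (1.0 = same direction)."""
    if len(a) != len(b):
        raise ValueError("feature vectors must have the same length")
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    if norm_a == 0 or norm_b == 0:
        return 0.0  # an all-zero vector carries no visual information
    return dot / (norm_a * norm_b)


def is_too_similar(frame: Sequence[float],
                   past_photos: Sequence[Sequence[float]],
                   threshold: float = SIMILARITY_THRESHOLD) -> bool:
    """True if the viewfinder frame closely matches ANY previously taken photo."""
    return any(cosine_similarity(frame, past) >= threshold for past in past_photos)


def capture_allowed(frame: Sequence[float],
                    past_photos: Sequence[Sequence[float]],
                    threshold: float = SIMILARITY_THRESHOLD) -> bool:
    """Show the capture button only when the frame is sufficiently different."""
    return not is_too_similar(frame, past_photos, threshold)


if __name__ == "__main__":
    # Toy 3-dimensional "features"; real feature vectors are far longer.
    history = [[1.0, 0.0, 0.0], [0.0, 1.0, 0.0]]
    print(capture_allowed([0.99, 0.05, 0.0], history))  # near-copy of the first photo
    print(capture_allowed([0.0, 0.0, 1.0], history))    # something new
```

In this design, lowering the threshold blocks more shots and raising it makes the app more forgiving, while an empty photo history always allows a capture. Because every new photo joins the history, the set of “original” frames keeps shrinking as the user shoots.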

It’s a bit like reversing the learning curve: the more photos you take, the harder it becomes to come up with original ways to capture what’s around you. The result is a rather frustrating journey sprinkled with occasional moments of satisfaction. The experience could be described as “performance art meets photography”: the user is prevented from relying on past experience, which creates the feeling of learning something for the first time. The camera app/bot project is called Svetlana in honor of my very stoic third-grade gymnastics teacher, who believed that shortcuts are for lazy people.

At this point you might ask yourself: what does this have to do with Duchamp and his crusade against “retinal” art? Well, until recently there haven’t been many tools available for photographers interested in creating work that is primarily non-retinal, or “at the service of the mind”, as Mr. Duchamp would have put it. But with machine learning, it is suddenly possible to create work that stretches beyond the limits of the medium while still retaining the accessibility and familiarity that photography offers.

I will be the first to admit that learning to code without any previous experience can at times be an uphill battle, especially if you got a C in math! However, the developer community can be very helpful as long as you show a genuine interest in learning the craft. Also, full disclosure: I co-created the anti-social media app minutiae a few years ago, and even though professional developers did the coding, understanding the complexity of creating an app proved to be a very valuable experience. The learning curve for code and machine learning is still quite steep, but for those who have patience (and time), the reward can be a newfound sense of creative freedom.

Svetlana in action

So is there a label for photography projects that put ideas ahead of visual representation? “Non-Retinal”, “New Photography”, “Computational Photography”, “New New Photography”, or “Photography 2.0”? I’m not sure, but whatever label the future gives it, machine learning will be just as revolutionary to photography as readymades were to the art world a century ago.

Interested in testing the app?

Request a beta invite here.